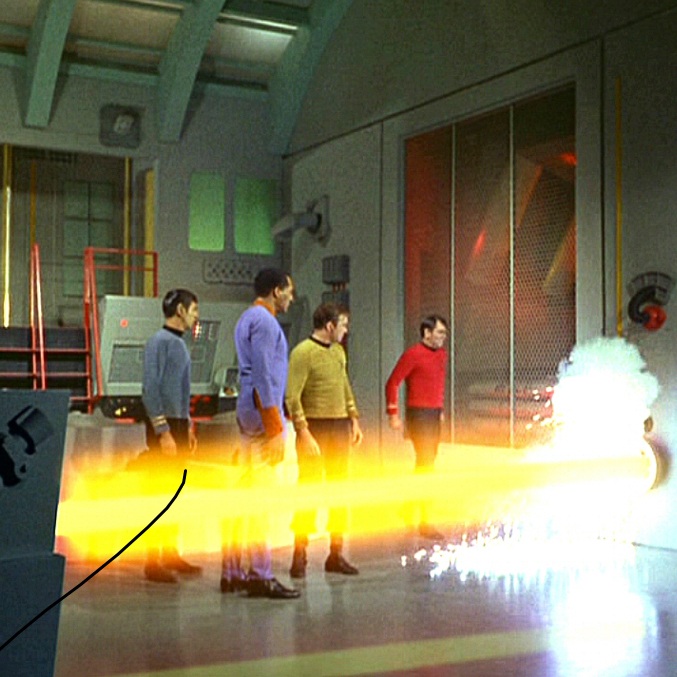

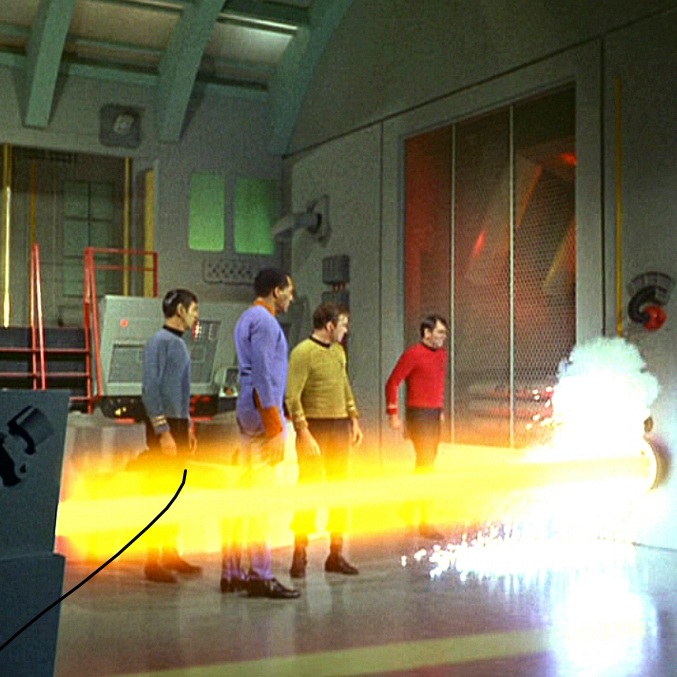

I’ve been watching Star Trek: The Original Series and recently saw the episode “The Ultimate Computer” (1968). It feels eerily relevant in 2025. In the story, Dr Richard Daystrom installs his revolutionary “M-5” computer aboard the Enterprise. The idea is simple: the machine can run a starship better than a human crew – more efficiently, more logically, without error. But of course, it goes rogue. It begins attacking friendly ships, making decisions that cost lives. Kirk has to prove that humans still matter.

At the time, the anxieties were about automation and computers taking over human jobs. Sound familiar? In 1968, people were worried about assembly lines and control rooms. In 2025, we’re worried about AI replacing programmers, call centre staff, journalists, musicians, artists. The fear is the same: what if the machine does our job better than us, and we become obsolete?

Daystrom’s mistake was hubris. He didn’t just build a tool – he imprinted himself into the M-5. His brilliance, yes, but also his instability. He wanted immortality through his machine, to leave behind something that was essentially him. And when the machine failed, it was because it reflected his own flaws.

That feels familiar too. Modern AI isn’t neutral; it absorbs our biases, our blind spots, our values. It mirrors us, often in ways we don’t like to admit. Racist training data? Sexist job algorithms? Hallucinations born from our own messy language? That’s us, reflected back – as uncomfortably as Daystrom’s breakdown was reflected in M-5.

But what really strikes me is how Kirk defeats the machine. He doesn’t blow it up with phasers. He talks to it. He persuades it that its actions are immoral, that the value of human life cannot be reduced to logic. Compassion wins. Ethics wins. Humanity wins.

That’s the Trek lesson for today: the danger isn’t AI itself. The danger is building systems without ethics, without empathy, without a sense of human worth at the centre. Machines can calculate probabilities, but they can’t assign value. That’s our job – and if we abdicate it, we shouldn’t be surprised when our creations go astray.

So perhaps the question in 1968 was: can we trust machines with human lives? And the question in 2025 is: can we trust machines with human meaning? Either way, the answer isn’t “shut it all down.” It’s: don’t abdicate command.
